🚀 TL;DR (For the Impatient Creators)

- Frame Pack is a free, open-source, offline AI video generator that runs on consumer-grade NVIDIA GPUs.

- You can generate 1–2 minute videos from just a single image and a short prompt.

- It runs on Windows or Linux, requires an NVIDIA GPU with 6 GB+ VRAM, and comes with a one-click installer for Windows.

- No censorship, no cloud costs, and surprisingly good quality.

I’ll show you:

- The best video results I got with it (with links and examples).

- Exactly how to install it, optimize it, and write prompts for it.

Let’s dive into the rabbit hole.

🎥 First, Just Watch This

These were all made offline. On my own machine. From just one image and a single sentence.

The results are so cinematic, you’d never guess they didn’t come from a pro animation studio.

What Is Frame Pack and Why Is It a Game-Changer?

Frame Pack is an open-source tool that allows you to:

- Animate any image into a full-length, consistent video.

- Run entirely offline, using just your GPU and a Gradio interface in your browser.

- Bypass cloud limitations (no paywalls, no censorship).

- Customize video length, style, motion, and fidelity.

And the kicker?

Frame Pack only needs 6 GB of VRAM. That’s laptop territory.

The original model it’s based on, Tencent’s HunyuanVideo, needed a jaw-dropping 60–80 GB. The creators of Frame Pack pulled off some engineering wizardry to compress that into a tool anyone can use.

✨What It Can Do (and What It Struggles With)

✅ It excels at:

- Smooth dancing sequences

- Kung fu / martial arts flows

- Pixar-style 3D animations

- Anime characters with facial expressions

- Cinematic walk cycles

- Painting-style animations with animated smoke, background, etc.

❌ It still struggles with:

- Hyper-detailed hand/finger motion (though turning off the TeaCache (TCH) toggle can help)

- Extreme facial expressions (crying, screaming, etc.)

- Ultra-fast action, like hyper-realistic boxing (still possible, but the result tends to be blurry)

Pro Tip: Always specify motion clearly in your prompt. Words like “fast movements,” “graceful,” or “high action” make a huge difference.

How to Install Frame Pack on Your PC: The Complete Step-by-Step Guide

Let’s get you up and running.

Step 1: System Requirements

- OS: Windows 10 or 11, or Linux (but we are going to focus on Windows in this article)

- GPU: NVIDIA with at least 6 GB of VRAM

- RAM: 16 GB recommended

- Disk Space: ~40 GB required
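If you'd rather check your machine against this list before downloading anything, here is a minimal sketch in Python. It assumes `nvidia-smi` is on your PATH (it ships with the NVIDIA driver). The function names and the 3% tolerance are my own illustration and not part of Frame Pack.

```python
import shutil
import subprocess

MIN_VRAM_GB = 6    # from the requirements list above
MIN_DISK_GB = 40   # approximate free space the install needs


def parse_vram_mib(smi_output: str) -> list[int]:
    """Parse nvidia-smi output with one integer (MiB) per line, one line per GPU."""
    return [int(line.strip()) for line in smi_output.splitlines() if line.strip()]


def query_vram_mib() -> list[int]:
    """Ask the NVIDIA driver for the total memory of each GPU, in MiB."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_vram_mib(result.stdout)


def free_disk_bytes(path: str) -> int:
    """Free space on the drive that contains `path`."""
    return shutil.disk_usage(path).free


def meets_requirements(vram_mib: int, disk_free_bytes: int) -> bool:
    """Compare one GPU and one drive against the minimums above."""
    # Drivers often report slightly less than the nominal card size,
    # so allow a 3% tolerance (an arbitrary choice for this sketch).
    vram_ok = vram_mib >= MIN_VRAM_GB * 1024 * 0.97
    disk_ok = disk_free_bytes >= MIN_DISK_GB * 1024**3
    return vram_ok and disk_ok
```

On a real machine you would call something like `meets_requirements(query_vram_mib()[0], free_disk_bytes("."))` from the folder where you plan to extract Frame Pack.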

Step 2: Download Frame Pack

- Go to the official GitHub repo (do not use copycat sites!): 👉 Frame Pack GitHub (Official)

- Download the 7z one-click installer (~1.7 GB) from the link labeled “>>> Click Here to Download One-Click Package (CUDA 12.6 + Pytorch 2.6) <<<”.

- Extract it to any folder you like.

- Double-click run.bat and wait for the model weights to download (~30 GB).

First Launch:

- The first time you run it, it will download all required models and dependencies.

- Be patient, it takes time but only happens once.

- You’ll get a local Gradio interface that opens in your browser.

(Optional but Powerful): Speed Up with Flash Attention

Want faster renders?

- Go into the system/python folder inside your framepack folder.

- Open a terminal (type cmd in the folder bar).

- Check Python, PyTorch, and CUDA versions:

python.exe --version

python.exe -m pip list

- Go to this site with prebuilt Flash Attention wheels.

- Download the version matching your setup.

- Install via terminal:

python.exe -m pip install "path-to-wheel.whl"

- Boom, faster generations, with solid quality.
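Picking "the version matching your setup" is the step where people slip, so here is a rough sketch of the check in Python. Wheel filenames follow the standard name-version-python-abi-platform pattern. The assumption here is that the builder encodes the CUDA and PyTorch versions in the version part (for example `+cu126torch2.6.0`), which is common for prebuilt Flash Attention wheels but not guaranteed. The filename in the usage note is a made-up example, and both helper functions are hypothetical.

```python
def wheel_tags(filename: str) -> dict:
    """Split a wheel filename into its standard components."""
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel file: " + filename)
    parts = filename[:-4].split("-")
    # Layout: name-version[-build]-python-abi-platform
    if len(parts) not in (5, 6):
        raise ValueError("unexpected wheel filename: " + filename)
    return {
        "name": parts[0],
        "version": parts[1],
        "python": parts[-3],
        "abi": parts[-2],
        "platform": parts[-1],
    }


def wheel_matches(filename: str, python_version: str, torch_version: str,
                  cuda_version: str, platform: str = "win_amd64") -> bool:
    """Check a wheel against the versions reported by python.exe and pip list."""
    tags = wheel_tags(filename)
    py_tag = "cp" + "".join(python_version.split(".")[:2])         # "3.10.6" -> "cp310"
    cuda_tag = "cu" + "".join(cuda_version.split(".")[:2])         # "12.6"   -> "cu126"
    torch_tag = "torch" + ".".join(torch_version.split(".")[:2])   # "2.6.0"  -> "torch2.6"
    version = tags["version"].lower()
    return (
        tags["python"] == py_tag
        and tags["platform"] == platform
        and cuda_tag in version      # assumes the builder encodes CUDA this way
        and torch_tag in version     # assumes the builder encodes PyTorch this way
    )
```

For example, `wheel_matches("flash_attn-2.7.4+cu126torch2.6.0cxx11abiFALSE-cp310-cp310-win_amd64.whl", "3.10.6", "2.6.0", "12.6")` returns True, while the same file checked against Python 3.12 returns False. If the site you download from uses a different naming scheme, adjust the two `in version` checks.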

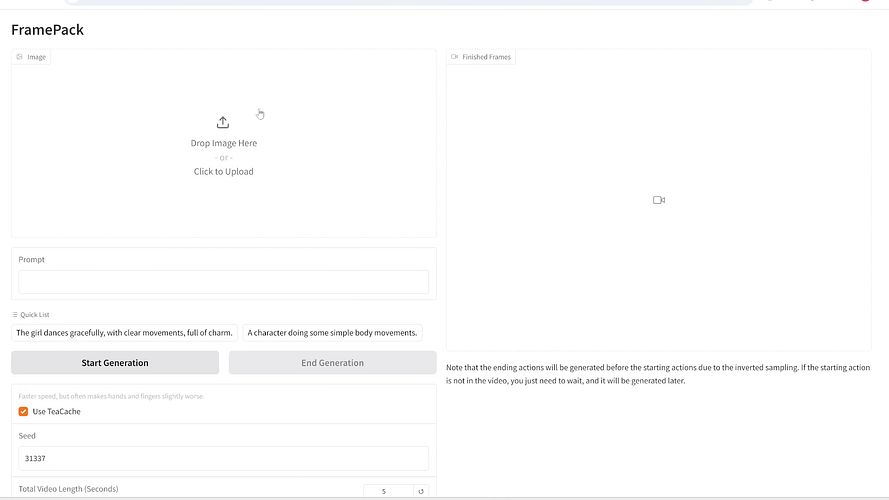

Interface Overview: Your AI Movie Studio

Once loaded, here’s what you’ll see:

- Upload image: Your starting point (photo, 3D render, anime, etc.)

- Prompt box: Describe the motion you want

- Video length: Up to 120 seconds

- Settings:

  - Steps: Quality vs. speed (15–25 is solid)

  - TeaCache (TCH) toggle: Faster generation when on, at the cost of hand/finger clarity

  - CFG scale: Prompt adherence (default = 10)

  - MP4 Compression: 0 = lossless, 16 = decent balance
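To keep track of the settings you try between runs, it can help to write them down as data. The sketch below is my own note-taking structure, not Frame Pack's API. The field names are hypothetical, the defaults are simply values picked from the list above, and the 30 fps figure is an assumption about the output frame rate.

```python
from dataclasses import dataclass

FPS = 30  # assumption: output frame rate used to estimate the frame count


@dataclass
class GenerationSettings:
    video_seconds: float = 5.0   # the UI allows up to 120 seconds
    steps: int = 25              # 15-25 is a solid range
    use_teacache: bool = True    # faster, but hands and fingers may suffer
    cfg_scale: float = 10.0      # prompt adherence
    mp4_crf: int = 16            # 0 = lossless, 16 = decent balance

    def problems(self) -> list[str]:
        """Return human-readable issues; an empty list means the values look sane."""
        issues = []
        if not 0 < self.video_seconds <= 120:
            issues.append("video length must be above 0 and at most 120 seconds")
        if self.steps < 1:
            issues.append("steps must be at least 1")
        if self.mp4_crf < 0:
            issues.append("MP4 compression cannot be negative")
        return issues

    def total_frames(self) -> int:
        """Rough number of frames the run has to produce."""
        return round(self.video_seconds * FPS)
```

Under that frame-rate assumption, `GenerationSettings(video_seconds=60).total_frames()` gives 1800, which is a useful reminder of why long clips take a while on a 6 GB card.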

Pro Prompting Tips

- Be specific: “The man walks slowly and confidently, smoke billows in the background.”

- Add pacing: “She turns her head slowly, then smiles.”

- Use action cues: “Characters argue furiously, hands gesturing, mouths moving fast.”
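If you generate a lot of clips, the three tips above can be turned into a tiny template. This is only a sketch of how I think about prompt structure (subject, action, pacing, background). The `build_prompt` helper is hypothetical and has nothing to do with Frame Pack itself; you still paste the result into the prompt box.

```python
def build_prompt(subject: str, action: str, pacing: str = "", background: str = "") -> str:
    """Compose a motion prompt: who moves, how, at what pace, and what else is happening."""
    main = f"{subject.strip()} {action.strip()}"
    if pacing.strip():
        main += f" {pacing.strip()}"
    clauses = [main]
    if background.strip():
        clauses.append(background.strip())
    text = ", ".join(clauses)
    # Capitalize the first letter and close the sentence.
    return text[0].upper() + text[1:] + "."
```

Calling `build_prompt("the man", "walks", pacing="slowly and confidently", background="smoke billows in the background")` reproduces the first example prompt above word for word.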

Common Issues (and Fixes)

Problem: Hands are blurry

Fix: Turn off the TeaCache (TCH) toggle

Problem: Out of memory

Fix: Raise the “GPU Inference Preserved Memory” setting and reduce video length

Problem: No motion

Fix: Be clearer in your prompt and specify every motion

Final Thoughts: This Is the Future of AI Video

What Stable Diffusion did for art, Frame Pack is doing for cinematic motion.

And the fact that it runs offline, requires no cloud, and works on consumer hardware is a massive leap for indie creators, animators, filmmakers, and storytellers.

You’re no longer limited by your budget. You’re only limited by your imagination.
